Report Overview

Canada Responsible AI Market Highlights

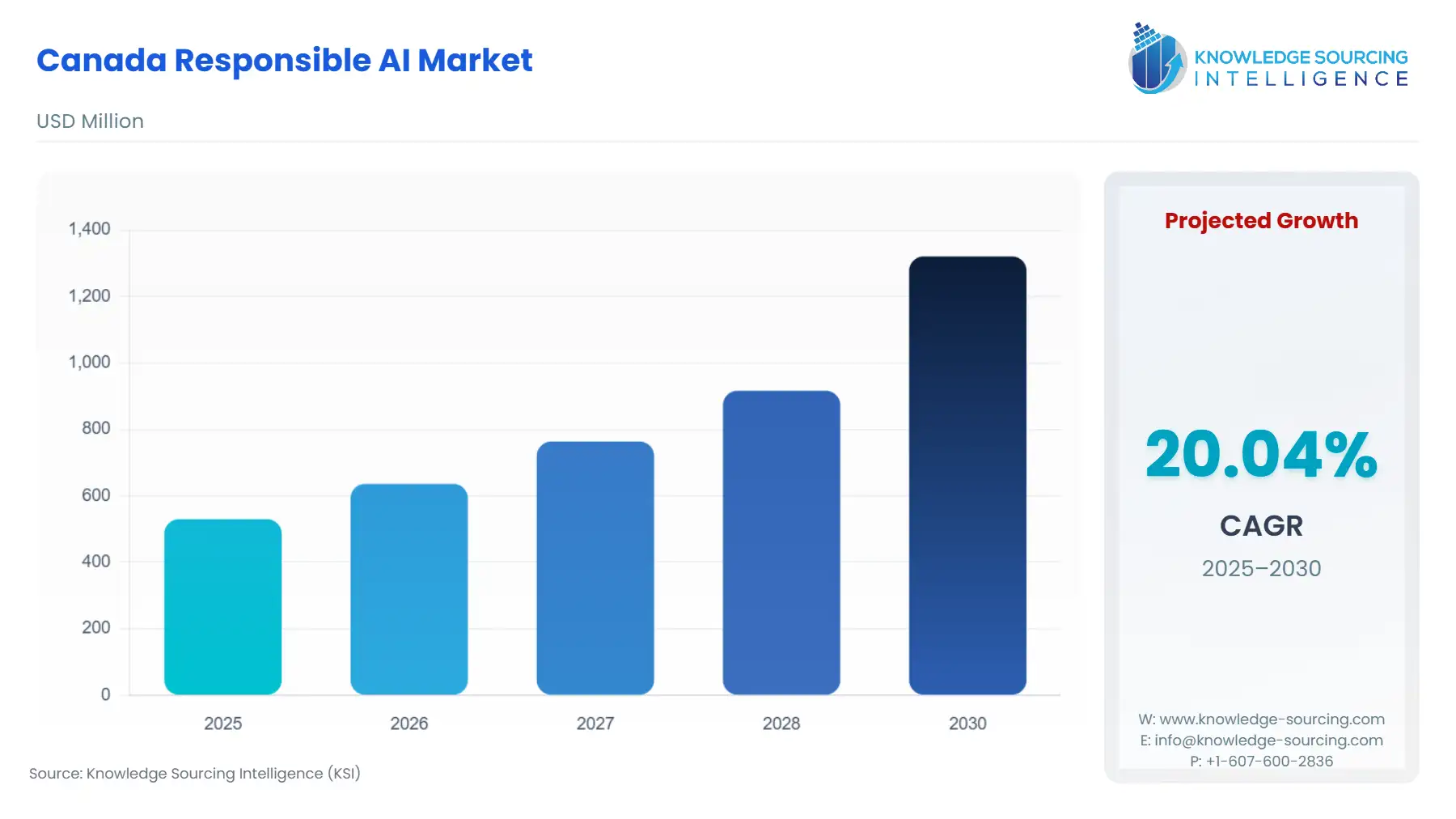

Canada Responsible AI Market Size:

The Canada Responsible AI Market is expected to grow at a CAGR of 20.04%, reaching a market size of USD 1.321 billion in 2030 from USD 0.530 billion in 2025.
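
The growth rate quoted above can be sanity-checked against the two market-size figures. The snippet below is a minimal sketch, assuming the standard compound annual growth rate formula and a five-year span between the start and end values; the function name `cagr` is illustrative and not part of the report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.20 means 20%)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the report: USD 0.530 billion growing to
# USD 1.321 billion over an assumed five-year span.
rate = cagr(0.530, 1.321, 5)
print(f"Implied CAGR: {rate:.2%}")  # prints "Implied CAGR: 20.04%"
```

The implied rate matches the 20.04% stated in the report.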

The Canadian market for Responsible AI is rapidly moving from a niche academic and ethical concern to a commercial imperative. Responsible AI, or trustworthy AI, encompasses the principles and practices that ensure AI systems are developed and used in a way that is fair, transparent, accountable, and safe. In Canada, a nation with a deep commitment to human rights and a burgeoning AI ecosystem, the market’s growth is not merely a technological trend but a direct response to a complex confluence of governmental, legal, and public pressures. The market’s trajectory is inextricably linked to the federal government's policy actions and investments, which are creating both a demand for responsible AI solutions and a supply of domestic expertise.

Canada Responsible AI Market Analysis:

- Growth Drivers

The Responsible AI market in Canada is propelled by a combination of impending regulatory requirements and a growing awareness of the reputational and financial risks of deploying unethical AI. A primary catalyst is the Canadian government's move toward a formal regulatory framework, most notably through the proposed Artificial Intelligence and Data Act (AIDA). While AIDA was not yet law as of late 2024, its stated risk-based approach signals a clear future direction, creating a strong market pull for tools and services that enable compliance. Companies across all sectors are now seeking solutions to conduct algorithmic impact assessments, mitigate bias, and provide explainability to preemptively align with these future requirements. This regulatory pressure directly increases demand for software platforms and consulting services focused on AI governance and risk management.

The federal government's strategic investment in AI infrastructure and safety further stimulates growth. Budget 2024 allocated a $2.4 billion package, which includes $50 million for the creation of the Canadian AI Safety Institute (CAISI). CAISI's mandate to advance the science of AI safety and work with international partners validates the importance of responsible AI and signals to the private sector that safety and ethics are core components of AI development, not optional add-ons. This public sector endorsement encourages enterprises to invest in responsible AI tools, because it reduces perceived risk and aligns their strategies with national priorities. Institutional recognition of responsible AI as a competitive advantage rather than a compliance burden directly expands the market.

- Challenges and Opportunities

The Responsible AI market in Canada faces significant headwinds, primarily due to regulatory uncertainty. AIDA has not been enacted, and the current reliance on voluntary codes of conduct and non-binding principles for advanced generative AI systems creates a fragmented and uncertain landscape. The lack of a clear, enforceable federal law can slow market adoption, as businesses may hesitate to invest heavily in compliance tools for regulations that are still in flux. The high cost of specialized talent, including AI ethics professionals and data scientists who focus on bias mitigation, is a further constraint, particularly for small and medium-sized enterprises (SMEs) that may lack the resources to build dedicated internal teams.

Despite these challenges, regulatory ambiguity and growing public and corporate scrutiny of AI also create a significant opportunity. Vendors can develop specialized software tools and services that adapt quickly to evolving regulations, which creates strong demand for "RegTech" solutions in the AI space: platforms that can be updated rapidly to align with new legal requirements. The need for skilled talent also creates a niche for professional services. Firms that provide AI auditing, ethical impact assessments, and training can address the talent gap faced by many organizations, creating a robust service-based segment of the market. The underlying opportunity is to provide clarity and structured solutions in a landscape that is currently fragmented and complex.

- Supply Chain Analysis

The supply chain for Responsible AI is predominantly intangible and knowledge-based. It is not a physical supply chain but a value network of talent, data, and intellectual property. The "production hubs" are Canada's world-leading AI research institutes, including Mila (Montreal), the Vector Institute (Toronto), and Amii (Edmonton). These institutes, supported by the Pan-Canadian Artificial Intelligence Strategy, are the primary source of fundamental research and highly specialized talent. The supply chain depends on a continuous flow of public and private funding, access to diverse and high-quality datasets for training and testing, and the ability to attract and retain top-tier AI ethics researchers and engineers. The logistical complexity lies in coordinating disparate entities (academic researchers, government agencies, and technology firms) to translate theoretical ethical frameworks into commercial, scalable software products.

- Government Regulations

The Canadian government is actively shaping the responsible AI market through a combination of policy directives and planned legislation.

| Jurisdiction | Key Regulation / Agency | Market Impact Analysis |
|---|---|---|
| Federal Government | Artificial Intelligence and Data Act (AIDA) | AIDA's proposed risk-based framework, which was included in Bill C-27, is the most significant demand-side driver. Although not yet law, the prospect of this legislation compels businesses to invest in responsible AI software and services. The requirement for algorithmic impact assessments and risk mitigation for high-impact AI systems directly creates demand for auditing and governance platforms that enable compliance. |
| Federal Government | Canadian AI Safety Institute (CAISI) | Established in late 2024, CAISI's purpose is to advance the science of AI safety. This initiative, with its dedicated funding, creates a market for research and development focused on technical safety solutions. It signals to the private sector that the government values and will support the creation of safe and responsible AI, thereby reducing the perception of risk in a nascent market. |
| Ontario Provincial Government | Trustworthy Artificial Intelligence (AI) Framework | Ontario's framework, effective December 1, 2024, is the first provincial directive to establish public sector guardrails for AI use. Its requirement for AI risk management and disclosure mandates the use of responsible AI principles in government projects, which in turn creates a precedent and a local demand for private sector AI providers to demonstrate their own responsible AI capabilities and tools. |

Canada Responsible AI Market Segment Analysis:

- By Component: Software Tools & Platforms

The software tools and platforms segment represents the most significant and fastest-growing portion of the Canadian Responsible AI market. Growth is driven by the operational need for concrete, scalable solutions that automate the complex processes of responsible AI implementation. As regulatory pressure mounts, companies are moving beyond manual audits toward software platforms that provide automated bias detection, explainability features, and real-time monitoring of AI models. Demand comes from data science teams and compliance officers who need to embed ethical principles directly into the AI development lifecycle. In banking, financial services, and insurance (BFSI), for instance, a platform that can automatically flag a loan application model for gender or racial bias before deployment is no longer a luxury but an essential risk mitigation tool. Demand for end-to-end, integrated solutions follows directly from the need to operationalize ethical guidelines and frameworks at scale. The market is propelled by a shift from a reactive, post-deployment mindset to a proactive, "by-design" approach to AI development.
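
To make the idea of automated bias flagging concrete, the sketch below computes a disparate impact ratio over a loan model's decisions and flags the model when the ratio falls below a threshold. It is a minimal illustration, not a description of any vendor's product: the group labels, the sample decisions, and the 0.8 cutoff (borrowed from the "four-fifths" rule of thumb, which is not a Canadian legal standard) are all assumptions made for the example.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Map each group to its approval rate, given (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact_ratio(decisions):
    """Lowest group approval rate divided by the highest group approval rate."""
    rates = approval_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so the rates do not differ
    return min(rates.values()) / highest


# Hypothetical model outputs: group "A" approved 80 of 100, group "B" 50 of 100.
sample = (
    [("A", True)] * 80 + [("A", False)] * 20
    + [("B", True)] * 50 + [("B", False)] * 50
)

THRESHOLD = 0.8  # assumed cutoff for this sketch only
ratio = disparate_impact_ratio(sample)
flagged = ratio < THRESHOLD
print(f"ratio={ratio:.3f} flagged={flagged}")
```

A real platform would typically combine several fairness metrics and run them as a gate before deployment; a single ratio is only the simplest starting point.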

- By End-User: BFSI (Banking, Financial Services, and Insurance)

The BFSI sector is a primary growth driver for Responsible AI services in Canada. Demand stems from the high-stakes, consumer-facing nature of the industry and the stringent regulatory environment already in place. Financial institutions use AI for credit scoring, fraud detection, and automated underwriting, and algorithmic bias or a lack of transparency poses significant legal, financial, and reputational risks. The sector's requirements center on auditability and explainability: regulators and customers alike expect a financial institution to be able to explain why an AI system made a specific decision, such as denying a credit application. This creates a strong market pull for specialized responsible AI services, including third-party auditing and model validation. These services help financial institutions demonstrate compliance with existing privacy laws and preemptively align with future responsible AI legislation, building trust with their customer base and reducing their legal exposure.
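
The explainability requirement described above can be illustrated with a simple "reason code" calculation. The sketch below assumes a logistic scoring model with hand-picked weights and a reference applicant; every feature name, weight, and value is hypothetical. For a linear model, each feature's contribution to the log-odds relative to the reference applicant is its weight times the difference in feature value, and the most negative contributions can be reported as the main reasons for a denial.

```python
import math

# Hypothetical model parameters, chosen only for illustration.
WEIGHTS = {
    "debt_to_income": -3.0,
    "missed_payments": -0.8,
    "years_of_credit_history": 0.15,
}
INTERCEPT = 1.0
# Hypothetical reference applicant against which contributions are measured.
BASELINE = {
    "debt_to_income": 0.30,
    "missed_payments": 0.0,
    "years_of_credit_history": 8.0,
}


def approval_probability(applicant):
    """Logistic score: sigmoid of the weighted sum of features."""
    z = INTERCEPT + sum(WEIGHTS[f] * applicant[f] for f in WEIGHTS)
    return 1 / (1 + math.exp(-z))


def reason_codes(applicant, top_n=2):
    """Features that lower the log-odds most, relative to the baseline."""
    contributions = {
        f: WEIGHTS[f] * (applicant[f] - BASELINE[f]) for f in WEIGHTS
    }
    negative = sorted((c, f) for f, c in contributions.items() if c < 0)
    return [f for _, f in negative[:top_n]]


applicant = {
    "debt_to_income": 0.55,
    "missed_payments": 3.0,
    "years_of_credit_history": 2.0,
}
probability = approval_probability(applicant)
if probability < 0.5:
    print("Denied. Main factors:", reason_codes(applicant))
```

Attribution is this direct only because the model is linear; more complex models generally need dedicated explanation techniques, which is part of what the auditing and model validation services described above provide.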

Canada Responsible AI Market Competitive Analysis:

The Canadian Responsible AI market is a competitive ecosystem with a mix of startups and established global consultancies. The primary competitive advantage is a deep specialization in specific ethical challenges and a strong portfolio of successfully implemented projects.

- Integrate.ai: The strategic positioning of this Canadian company centers on a platform that enables the responsible use of consumer data for personalization. Its core product is designed to let businesses apply machine learning to data without revealing personal information, which addresses a key tenet of responsible AI: privacy and data protection. This focus on privacy-enhancing technologies directly serves demand from end-users, particularly in the telecommunications and retail sectors, who want to use data for business insights while maintaining customer trust and complying with privacy regulations.

- Vector Institute: Like Mila, the Vector Institute is a key player not as a direct commercial competitor but as a foundational research hub. Its strategic role is to produce top-tier AI talent and research, including in AI ethics and governance. By collaborating with industry partners, the Vector Institute helps translate academic research into practical, commercially viable responsible AI solutions. It influences the market by supplying the intellectual capital and skilled workforce that new ventures and established companies need to build their own responsible AI capabilities.

Canada Responsible AI Market Developments:

- November 2024: The Canadian Artificial Intelligence Safety Institute (CAISI) was formally launched as part of the Government of Canada's initiative to support the safe and responsible development of AI. This development provides a dedicated institutional framework to address the risks of advanced AI systems and creates a new hub for AI safety research and policy.

- July 2025: The Government of Canada, through its AI Sovereign Compute Strategy, launched the AI Compute Access Fund with an investment of up to $300 million to help Canadian innovators and businesses access high-performance computing. This initiative indirectly supports the responsible AI market by making it more economically feasible for Canadian companies to train and test large-scale AI models responsibly and securely within Canada's borders.

Canada Responsible AI Market Scope:

| Report Metric | Details |
|---|---|
| Total Market Size in 2026 | USD 0.530 billion |
| Total Market Size in 2031 | USD 1.321 billion |
| Growth Rate | 20.04% CAGR during the forecast period |
| Study Period | 2021 to 2031 |
| Historical Data | 2021 to 2024 |
| Base Year | 2025 |
| Forecast Period | 2026 – 2031 |
| Segmentation | Components, Deployment, End-User |
| Companies | |

Canada Responsible AI Market Segmentation:

- BY COMPONENT
  - Software Tools & Platforms
  - Services
- BY DEPLOYMENT
  - On-Premises
  - Cloud
- BY END-USER
  - Healthcare
  - BFSI
  - Government and Public Sector
  - Automotive Industry
  - IT and Telecommunication
  - Others