Report Overview

France Responsible AI Market Highlights

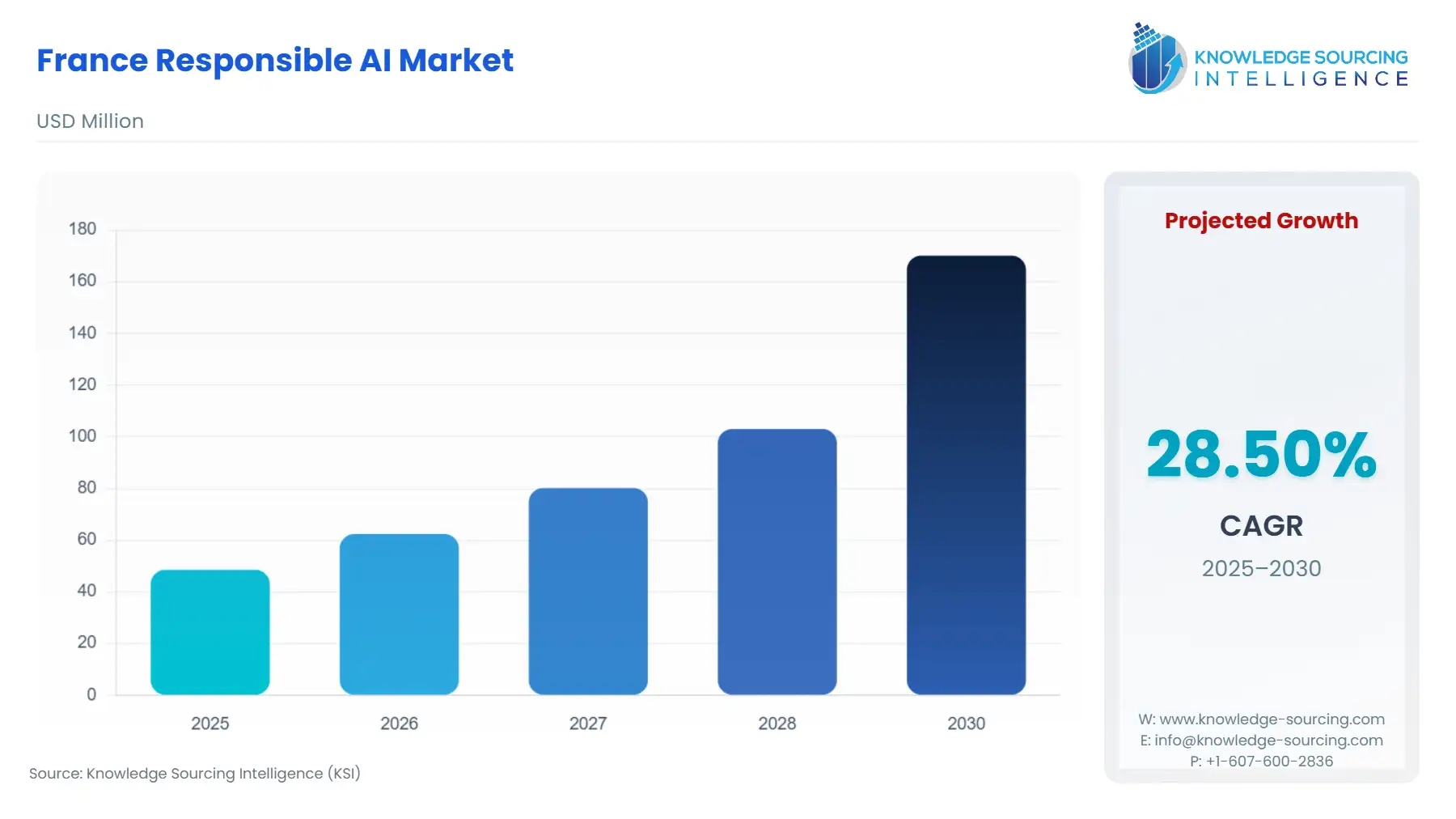

France Responsible AI Market Size:

The France Responsible AI Market is expected to grow at a CAGR of 28.50%, reaching USD 170.163 million in 2030 from USD 48.568 million in 2025.
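The headline figures can be cross-checked with the standard compound annual growth rate formula. The sketch below is only an arithmetic check on the numbers quoted above; the function names `cagr` and `project` are illustrative and not part of the report's methodology.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate, returned as a fraction (0.285 == 28.5%)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `rate` annually for `years` years."""
    return start_value * (1 + rate) ** years


# Figures quoted in the report (USD million).
START_2025 = 48.568
END_2030 = 170.163
YEARS = 5

implied_rate = cagr(START_2025, END_2030, YEARS)
projected_2030 = project(START_2025, 0.285, YEARS)

print(f"Implied CAGR: {implied_rate:.2%}")               # roughly 28.5%
print(f"Projected 2030 size: USD {projected_2030:.3f} million")
```

Under these assumptions the start value, end value, and growth rate are mutually consistent over a five-year horizon.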

The French market for Responsible AI is an ecosystem shaped primarily by a proactive regulatory landscape and a national strategic imperative. Responsible AI, encompassing principles of fairness, transparency, accountability, and security, is no longer a niche consideration but a fundamental requirement for the development and deployment of AI systems. In France, this market's trajectory is directly tied to the European Union's broader regulatory agenda, most notably the EU AI Act. This legislation, combined with France's own National Strategy for AI, has created a market dynamic in which ethical considerations are a direct catalyst for commercial demand. Companies operating in this environment are motivated not just by corporate social responsibility but by a clear legal and reputational imperative to ensure their AI technologies are trustworthy.

France Responsible AI Market Analysis:

- Growth Drivers

The responsible AI market in France is a direct consequence of a strong regulatory push from the European Union and national policy. The EU AI Act, which entered into force in August 2024 and categorizes AI applications by risk, is a primary catalyst. High-risk AI systems, such as those used in healthcare, law enforcement, and critical infrastructure, will be subject to stringent legal requirements concerning data governance, transparency, and human oversight as the Act's obligations take effect in phases. This regulatory framework creates a non-negotiable demand for software tools and services that enable organizations to perform risk assessments, conduct fundamental rights impact assessments, and maintain technical documentation to demonstrate compliance. As the EU AI Act moves through its phased implementation, businesses are compelled to invest in responsible AI solutions to ensure market access and avoid severe penalties.

Additionally, France's National Strategy for AI (SNIA), which has received significant government funding, actively promotes the development of "embedded and trustworthy AI." The establishment of entities like the National Institute for the Evaluation and Security of Artificial Intelligence (INESIA) in February 2025 further drives this demand. INESIA's role in coordinating national efforts on AI security risk analysis and regulatory implementation creates a need for solutions that align with these national standards. The combination of mandatory compliance driven by the EU and strategic, funded national initiatives creates a robust dual demand for responsible AI, moving it from a theoretical concept to a business and legal necessity.

- Challenges and Opportunities

The primary challenge facing the French Responsible AI market is the technical and financial complexity of achieving compliance. Implementing responsible AI principles, such as ensuring fairness, mitigating bias, and providing model explainability, requires specialized software tools and expertise that are often costly and technically challenging to integrate into existing systems. This presents a significant hurdle for small and medium-sized enterprises (SMEs) that may lack the resources to meet these arduous requirements. Without accessible and affordable solutions, these companies risk being left behind, creating a market friction point.

This challenge, however, presents a significant opportunity. The market is now poised for the growth of a new class of enterprise software. There is a strong opportunity for companies to develop and commercialize tools that simplify the compliance process. Solutions that offer automated bias detection, explainable AI (XAI) modules, and comprehensive auditing platforms are in high demand. These services can transform a complex regulatory burden into a manageable, integrated part of the AI development lifecycle. Companies that can provide intuitive, cost-effective, and scalable responsible AI solutions will be well-positioned to capitalize on this opportunity, particularly by targeting the large segment of SMEs and enterprises that need to comply with the EU AI Act but lack internal capabilities.

- Supply Chain Analysis

The supply chain for the French Responsible AI market is intangible and knowledge-based, consisting of a network of academic institutions, specialized software firms, and professional service providers. Unlike physical products, its key "components" are intellectual property, human expertise, and computational resources. The supply chain's hubs are research clusters and AI centers, such as those in Paris and Grenoble, which generate foundational research on AI ethics and safety. It depends on the availability of highly skilled data scientists, ethicists, and legal experts who can translate responsible AI principles into practical, auditable code and policy. The logistical complexity lies in the collaboration between these diverse stakeholders: academics provide the theoretical framework, software developers build the tools, and consultants apply them to client-specific contexts. The supply of talent is therefore a critical constraint on market growth.

France Responsible AI Market Government Regulations:

The French government and European Union have directly impacted the market through a series of foundational regulations and strategic initiatives.

| Jurisdiction | Key Regulation / Agency | Market Impact Analysis |
|---|---|---|
| European Union | EU AI Act | This is the primary regulatory driver. By classifying AI systems into risk categories, the Act creates a direct, segmented demand for responsible AI solutions. High-risk systems must meet strict requirements for data governance, documentation, and human oversight, creating a compulsory market for compliance tools and services. Companies that fail to comply risk significant fines, making responsible AI a legal and financial imperative. |
| France | Commission Nationale de l'Informatique et des Libertés (CNIL) | The CNIL's role as France's data protection authority directly influences the responsible AI market. Its "soft law" approach, through the issuance of guidance and recommendations on GDPR and AI, particularly regarding personal data processing, creates specific demand for privacy-by-design and transparent AI systems. The CNIL's past enforcement actions, such as the fines against Clearview AI, underscore the legal risks of non-compliance and further compel organizations to invest in responsible AI. |

France Responsible AI Market Segment Analysis:

- By Component: Software Tools & Platforms

The software tools and platforms segment is a major growth driver in the French Responsible AI market, propelled by the need for automated solutions to manage complex regulatory requirements. The sheer volume of data and the intricacy of modern AI models make manual oversight impractical. This has created a significant market for specialized software that can automate key aspects of responsible AI. These platforms offer features such as model fairness and bias detection, which automatically scan for and report on demographic biases in datasets and model outputs. They also provide explainable AI (XAI) capabilities, allowing developers and regulators to understand how an AI system arrives at a particular decision. Furthermore, these tools offer comprehensive auditing and documentation features, which are critical for demonstrating compliance with the EU AI Act's rigorous requirements. The need for these platforms is directly proportional to the increasing adoption of AI across regulated industries, as companies seek efficient, scalable ways to ensure their systems are transparent, fair, and compliant.
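To make the bias-detection feature described above more concrete, the following is a minimal sketch of one common fairness check, the demographic parity difference (the gap between the highest and lowest rate of positive predictions across groups). It is an illustration only: the function names and the toy data are hypothetical, it does not represent any vendor's product, and real platforms typically combine several metrics rather than relying on this one.

```python
from collections import defaultdict
from typing import Dict, Hashable, Sequence


def selection_rates(groups: Sequence[Hashable],
                    predictions: Sequence[int]) -> Dict[Hashable, float]:
    """Share of positive (1) predictions within each demographic group."""
    if len(groups) != len(predictions):
        raise ValueError("groups and predictions must have the same length")
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, pred in zip(groups, predictions):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(groups: Sequence[Hashable],
                                  predictions: Sequence[int]) -> float:
    """Gap between the highest and lowest group selection rate (0 = parity)."""
    rates = selection_rates(groups, predictions)
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: group label and binary model decision per applicant.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
predictions = [1, 1, 1, 0, 1, 0, 0, 0]

rates = selection_rates(groups, predictions)
gap = demographic_parity_difference(groups, predictions)
print(rates)   # {'A': 0.75, 'B': 0.25}
print(gap)     # 0.5
```

A gap near zero suggests similar treatment across groups on this metric; what threshold counts as acceptable is a policy decision that the code does not settle.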

- By End-User: Government and Public Sector

The government and public sector is a critical end-user segment for the France Responsible AI market, driven by the imperative to ensure fairness, transparency, and accountability in public service. The use of AI in areas like public administration, justice, and social services carries a high degree of public trust and legal risk. The French government's commitment to "AI for Humanity" and its national strategy to position France as a leader in trustworthy AI create a direct demand for responsible AI solutions. Government agencies are deploying AI to optimize services and make data-driven decisions, but they must do so in a way that respects citizen rights and avoids algorithmic discrimination. This fuels the need for solutions that can audit and validate the fairness of AI systems used for public policy or citizen-facing applications. The government's own adoption of these technologies, coupled with the CNIL's oversight, validates the market and creates a precedent for other sectors to follow, strengthening the overall market.

France Responsible AI Market Competitive Analysis:

The competitive landscape for responsible AI in France is fragmented, featuring a mix of large international professional services firms, domestic technology companies, and research-driven startups. The competition is centered on expertise, technological differentiation, and a proven ability to deliver auditable, compliant solutions.

- Capgemini: Capgemini's strategic positioning in the responsible AI market is defined by its focus on providing a full suite of professional services, from advisory and strategy to implementation and governance. The company is actively addressing the ethical and sustainability aspects of AI, as evidenced by its partnership with the World Economic Forum on the AI Governance Alliance and its focus on "Generative AI: Accelerating sustainability through responsible innovation." Capgemini's approach is to provide comprehensive, end-to-end solutions that help clients navigate the complexities of regulation and ethical implementation.

- Thales: Thales leverages its core competencies in security and defense to position itself as a provider of "Digital Trust" for AI systems. The company's focus is on ensuring the security and integrity of data and AI-driven processes, which are foundational components of responsible AI. Thales's T-Sure platform, for example, is designed to manage the security and data integrity of connected systems. By focusing on cybersecurity and trusted data environments, Thales addresses a critical component of the responsible AI landscape, particularly for high-stakes applications in regulated industries like government and defense.

France Responsible AI Market Developments:

- September 2025: Accenture announced its intent to acquire the French Orlade Group. This acquisition would expand Accenture's capital project management capabilities, with a specific focus on leveraging advanced technologies like generative AI to drive "responsible transformation." The move highlights a strategic effort by a major consulting firm to integrate responsible AI as a core component of its service offerings for large-scale industrial projects.

- July 2025: The French data protection authority, CNIL, finalized new recommendations on the development of AI systems. The guidance provides concrete solutions for preventing personal data processing in AI models, such as implementing robust filters. This development underscores the regulatory pressure on companies to build privacy-by-design AI systems and creates a clear demand for tools and services that enable compliance with these recommendations.

France Responsible AI Market Scope:

| Report Metric | Details |
|---|---|
| Total Market Size in 2026 | USD 48.568 million |
| Total Market Size in 2031 | USD 170.163 million |
| Growth Rate | 28.50% |
| Study Period | 2021 to 2031 |
| Historical Data | 2021 to 2024 |
| Base Year | 2025 |
| Forecast Period | 2026 – 2031 |
| Segmentation | Component, Deployment, End-User |
| Companies | |

France Responsible AI Market Segmentation:

- BY COMPONENT
  - Software Tools & Platforms
  - Services
- BY DEPLOYMENT
  - On-Premises
  - Cloud
- BY END-USER
  - Healthcare
  - BFSI
  - Government and Public Sector
  - Automotive Industry
  - IT and Telecommunication
  - Others