Exploring the Key Tools of Ethical AI

Ethical AI gives top priority to fairness, accountability, transparency, and the welfare of society. It aims to ensure that AI systems protect privacy, avoid bias, and respect human rights. Ethical AI design requires diverse viewpoints, thorough testing, and ongoing oversight to minimize potential risks. It encourages the responsible application of AI technologies and builds trust among developers, consumers, and affected communities. Ultimately, ethical AI seeks to align technical advancement with moral standards and social values so that it benefits humanity rather than exploits it.

Top Ethical AI Tools

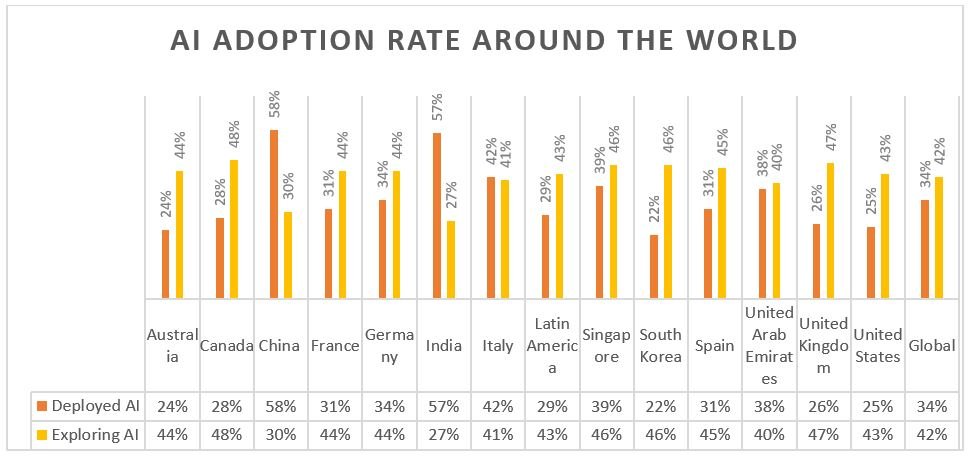

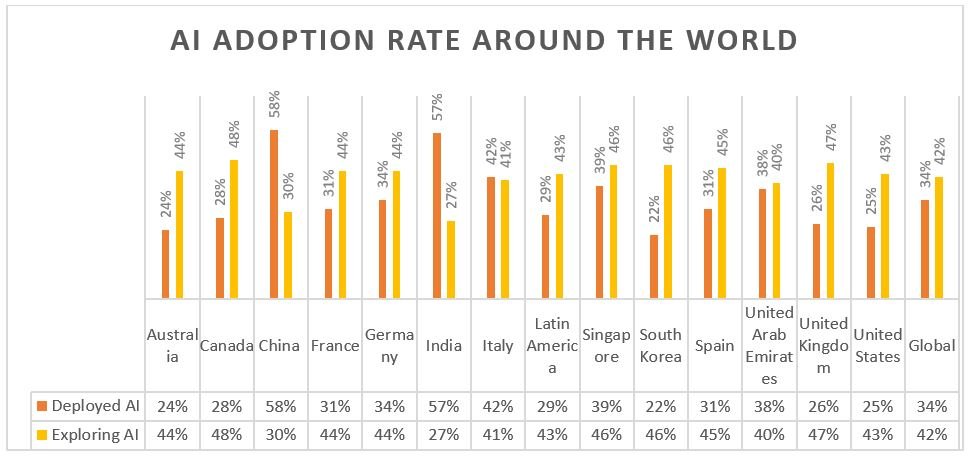

Source: IBM

5. Diversity, non-discrimination, and bias mitigation

AI systems should be designed and tested with a wide range of users to prevent inadvertent bias. Left unchecked, AI may produce biased outcomes or marginalize some populations. Bias can be difficult to eliminate because not all groups are represented in AI development, and not all groups use AI in the same way or to the same extent. A crucial first step in reducing discrimination is to encourage inclusion and ensure that everyone has access to AI. Ongoing evaluations and adjustments throughout the development and deployment phases can mitigate bias further. Publishing data can also encourage the use of impartial AI, and using a variety of data sets has been shown to reduce the likelihood of bias.
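To make the idea of ongoing evaluation concrete, the following is a minimal sketch of one common check: comparing how often a model returns a positive decision for each group. It is an illustration only and does not come from IBM or any tool named in this article. The function names, group labels, and sample data are hypothetical, and demographic parity is just one of several fairness measures a team might track.

```python
from collections import defaultdict


def selection_rates(groups, predictions):
    """Return the share of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, pred in zip(groups, predictions):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(rates):
    """Gap between the highest and lowest group rates (0 means parity)."""
    return max(rates.values()) - min(rates.values())


# Hypothetical audit sample: group label and model decision (1 = approved).
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
predictions = [1, 1, 1, 0, 1, 0, 0, 0]

rates = selection_rates(groups, predictions)
gap = demographic_parity_difference(rates)
print(rates, gap)
```

Running a check like this on each new model version or data refresh is one way to notice when the gap between groups widens. What size of gap is acceptable is a policy decision that depends on the application.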

Industry leaders including Block, Canva, Carlyle, The Estée Lauder Companies, PwC, and Zapier are already using OpenAI's ChatGPT Enterprise, which was introduced a few months ago, to transform the way their businesses run. OpenAI has now introduced ChatGPT Team as a new self-serve option. ChatGPT Team provides access to advanced models, such as GPT-4 and DALL·E 3, and tools such as Advanced Data Analysis. It also comes with a dedicated collaborative workspace and admin tools for managing a team. As with ChatGPT Enterprise, customers own and control their business data.

- Privacy

- Accountability

- Human Oversight

- Transparency

- Diversity, non-discrimination, and bias mitigation

Find some of our related studies:

- Adaptive AI Market: https://www.knowledge-sourcing.com/report/adaptive-ai-market

- Generative AI Market: https://www.knowledge-sourcing.com/report/generative-ai-market

- Artificial Intelligence (AI) In Mental Health Market: https://www.knowledge-sourcing.com/report/ai-in-mental-health-market
